And The Milkmaid by Johannes Vermeer is very racy

What is the Google Image Tool?

The tool is a demo of Google’s Cloud Vision API, a cloud service that lets you add image analysis features to apps and websites.

The tool lets you upload an image and shows how Google’s machine learning algorithm interprets it.

These are the seven ways Google’s image analysis tool classifies uploaded images:

Faces

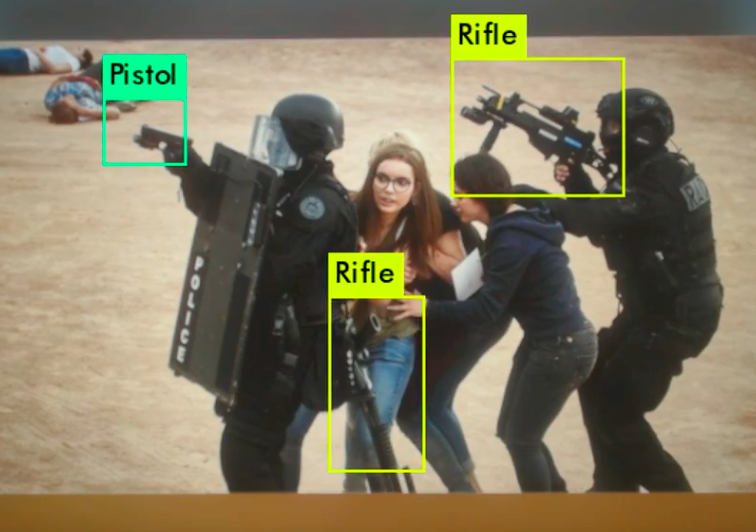

Objects

Labels

Web Entities

Text

Properties

Safe Search
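Outside the demo, these seven categories roughly correspond to feature types in the Cloud Vision REST API's `images:annotate` method. The sketch below only builds the JSON request body for one image. The mapping from the demo's tab names to feature types is our assumption, and `build_annotate_request` is a hypothetical helper. Actually sending the body requires a POST to `https://vision.googleapis.com/v1/images:annotate` with valid credentials, which is not shown.

```python
import base64
import json

# Assumed mapping from the demo's tab names to Cloud Vision feature types.
DEMO_TABS_TO_FEATURES = {
    "Faces": "FACE_DETECTION",
    "Objects": "OBJECT_LOCALIZATION",
    "Labels": "LABEL_DETECTION",
    "Web Entities": "WEB_DETECTION",
    "Text": "TEXT_DETECTION",
    "Properties": "IMAGE_PROPERTIES",
    "Safe Search": "SAFE_SEARCH_DETECTION",
}


def build_annotate_request(image_bytes: bytes, max_results: int = 10) -> dict:
    """Build an images:annotate request body asking for all seven feature types."""
    return {
        "requests": [
            {
                # Inline images are sent as base64-encoded content.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                # maxResults is ignored by feature types it does not apply to.
                "features": [
                    {"type": feature, "maxResults": max_results}
                    for feature in DEMO_TABS_TO_FEATURES.values()
                ],
            }
        ]
    }


if __name__ == "__main__":
    body = build_annotate_request(b"placeholder image bytes")
    print(json.dumps(body, indent=2))
```

Asking for all seven features in one request mirrors what the demo shows on its tabs; a real application would usually request only the features it needs.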

In the example image, John Mueller is clearly smiling, but Google’s image analysis tool didn’t detect it.
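In the API response, a smile judgement like this one shows up in the `joyLikelihood` field of each entry in `faceAnnotations`. The sketch below reads that field from a single already-parsed entry of the `responses` array. The sample response is invented for illustration (it is not the tool's actual output for this photo), and treating `LIKELY` as "smiling" is our assumption.

```python
# Cloud Vision reports likelihoods as enum names, ordered from least to most likely.
LIKELIHOOD_ORDER = [
    "UNKNOWN",
    "VERY_UNLIKELY",
    "UNLIKELY",
    "POSSIBLE",
    "LIKELY",
    "VERY_LIKELY",
]


def at_least(value: str, threshold: str) -> bool:
    """True if a likelihood name is at or above the threshold."""
    return LIKELIHOOD_ORDER.index(value) >= LIKELIHOOD_ORDER.index(threshold)


def smiling_faces(response: dict, threshold: str = "LIKELY") -> list[int]:
    """Return indices of faces whose joyLikelihood meets the threshold."""
    faces = response.get("faceAnnotations", [])
    return [
        i
        for i, face in enumerate(faces)
        if at_least(face.get("joyLikelihood", "UNKNOWN"), threshold)
    ]


# Hypothetical response: one face, with joy rated only UNLIKELY.
sample = {
    "faceAnnotations": [
        {"joyLikelihood": "UNLIKELY", "sorrowLikelihood": "VERY_UNLIKELY"}
    ]
}
print(smiling_faces(sample))  # []
```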

Safe Search rates each image in five categories: ADULT, SPOOF, MEDICAL, VIOLENCE and RACY.
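In the API, these five ratings come back as the fields `adult`, `spoof`, `medical`, `violence` and `racy` of `safeSearchAnnotation`, each holding a likelihood name such as `VERY_LIKELY`. A minimal sketch of turning them into flags follows. The `LIKELY` threshold, the helper name `flagged_categories` and the sample values are assumptions for illustration, not the tool's real output for any image in this article.

```python
# Rank of each Likelihood enum name used in Cloud Vision responses.
LIKELIHOOD_RANK = {
    name: rank
    for rank, name in enumerate(
        ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]
    )
}

SAFE_SEARCH_CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")


def flagged_categories(annotation: dict, threshold: str = "LIKELY") -> list[str]:
    """Return the Safe Search categories rated at or above the threshold."""
    limit = LIKELIHOOD_RANK[threshold]
    return [
        category
        for category in SAFE_SEARCH_CATEGORIES
        if LIKELIHOOD_RANK[annotation.get(category, "UNKNOWN")] >= limit
    ]


# Hypothetical safeSearchAnnotation for a painting; values invented for illustration.
painting = {
    "adult": "UNLIKELY",
    "spoof": "VERY_UNLIKELY",
    "medical": "VERY_UNLIKELY",
    "violence": "VERY_UNLIKELY",
    "racy": "VERY_LIKELY",
}
print(flagged_categories(painting))  # ['racy']
```

Where the threshold sits is a policy choice: lowering it to `UNLIKELY` would also flag `adult` for the same sample.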


"[T]he AI system was more likely to associate European American names with pleasant words such as 'gift' or 'happy' while African American names were more commonly associated with unpleasant words"

Hannah Devlin
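Associations like these are usually measured on word embeddings, the numeric vectors a system learns for each word. A minimal sketch of one such measure, in the spirit of the association tests used in this kind of research: a word's mean cosine similarity to a set of pleasant words minus its mean similarity to a set of unpleasant words. The 3-dimensional vectors and the names `name_a` and `name_b` are toy values we invented; real embeddings have hundreds of dimensions and are learned from text.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def association(
    word: list[float], pleasant: list[list[float]], unpleasant: list[list[float]]
) -> float:
    """Mean similarity to pleasant words minus mean similarity to unpleasant words.

    Positive values mean the word sits closer to the pleasant set.
    """
    pos = sum(cosine(word, p) for p in pleasant) / len(pleasant)
    neg = sum(cosine(word, n) for n in unpleasant) / len(unpleasant)
    return pos - neg


# Toy 3-dimensional "embeddings", invented for illustration only.
pleasant = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]    # stand-ins for e.g. "gift", "happy"
unpleasant = [[0.1, 0.9, 0.0], [0.0, 0.8, 0.3]]  # stand-ins for unpleasant words
name_a = [0.7, 0.3, 0.1]
name_b = [0.3, 0.7, 0.1]

print(round(association(name_a, pleasant, unpleasant), 3))
print(round(association(name_b, pleasant, unpleasant), 3))
```

If embeddings learned from human-written text place one group's names systematically closer to the unpleasant words, any system built on them inherits that association.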

Misalignment between our goals and the machine's

Part of what humans value in AI-powered machines is their efficiency and effectiveness. But if we aren't clear about the goals we set for AI machines, and a machine isn't armed with the same goals we have, the results could be dangerous. For example, a command to "Get me to the airport as quickly as possible" might have dire consequences. Without specifying that the rules of the road must be respected because we value human life, a machine could quite effectively accomplish its goal of getting you to the airport as quickly as possible, doing literally what you asked, but leave behind a trail of accidents.
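The airport example boils down to an objective with a missing constraint. A toy sketch, with invented routes and travel times and hypothetical function names: the literal objective picks whatever is fastest, and only an explicitly stated constraint rules out the dangerous option.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    minutes: float
    breaks_traffic_rules: bool


def fastest(routes: list[Route]) -> Route:
    """Literal objective: minimize travel time and nothing else."""
    return min(routes, key=lambda r: r.minutes)


def fastest_legal(routes: list[Route]) -> Route:
    """Same objective, plus the constraint we forgot to state: obey the rules of the road."""
    legal = [r for r in routes if not r.breaks_traffic_rules]
    if not legal:
        raise ValueError("no route satisfies the constraint")
    return min(legal, key=lambda r: r.minutes)


# Hypothetical options for "get me to the airport as quickly as possible".
options = [
    Route("motorway at the speed limit", 32.0, False),
    Route("run red lights, use the hard shoulder", 21.0, True),
]

print(fastest(options).name)        # run red lights, use the hard shoulder
print(fastest_legal(options).name)  # motorway at the speed limit
```

The machine is not malicious in either case; it optimizes exactly what it was given, which is why the goals have to include the things we value.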

original photo

edited photo

Social media has a terrorism problem. From Twitter’s famous 2015 letter to Congress that it would never restrict the right of terrorists to use its platform, to its rapid about-face amid public and governmental outcry, Silicon Valley has had a change of heart in how it sees its role in curbing the use of its tools by those who wish to commit violence across the world. Today Facebook released a new transparency report that emphasizes its efforts to combat terroristic use of its platform and the role AI is playing in what it claims are significant successes. Yet that narrative of AI success has been increasingly challenged, from academic studies suggesting that not only is content not being deleted, but that other Facebook tools may actually be assisting terrorists, to a Bloomberg piece last week that demonstrates just how readily terrorist content can still be found on Facebook. Can we really rely on AI to curb terroristic use of social media?

The same year Twitter rebuffed Congress’ efforts to rein in terroristic use of social media, I was presenting to a meeting of senior Iraqi and regional military and governmental leadership when I was asked for my perspective on how to solve what was even then a rapid transformation of recruitment and propaganda tradecraft by terrorist organizations. The resulting vision I outlined of bringing the “wilderness of mirrors” approach of counterintelligence to the social media terrorism domain received considerable attention at the time and highlighted the futility of the whack-a-mole approach to censoring terrorist content.

Yet Facebook in particular has latched onto the idea of using AI to delete all terroristic content across its platform in real time. Despite fighting tooth and nail over the past several years to avoid releasing substantive detail on its content moderation efforts, Facebook today released some basic statistics about its efforts that include a special focus on its counter-terrorism efforts. As with each major leap in action or transparency, it seems increasing government and press scrutiny of Facebook has finally paid off.

As with all things Silicon Valley, every word in these reports counts and it is important to parse the actual statements carefully. Facebook, to its credit, was especially clear on just what it is targeting. It headlined its counter-terrorism efforts as “Terrorist Propaganda (ISIS, al-Qaeda and affiliates),” reinforcing that these are the organizations it is primarily focused on. Given that these are the groups that Western governments have put the most pressure on Silicon Valley to remove, the effect of government intervention on forcing social media companies to act is clear.

In its report, Facebook states specifically “in Q1 we took action on 1.9 million pieces of ISIS, al-Qaeda and affiliated terrorism propaganda, 99.5% of which we found and flagged before users reported them to us.” This statement makes clear that the overwhelming majority of terrorism-related content deleted by Facebook was flagged by its AI tools, but says nothing about what percent of all terrorism content on the platform the company believes it has addressed.

In contrast, the general public would be forgiven for believing that Facebook’s algorithms are vastly more effective. The New York Times summarized the statement above as “Facebook’s A.I. found 99.5 percent of terrorist content on the site, leading to the removal of roughly 1.9 million pieces of content in the first quarter,” while the BBC offered “the firm said its tools spotted 99.5% of detected propaganda posted in support of Islamic State, Al-Qaeda and other affiliated groups, leaving only 0.5% to the public.” In fact, this is not at all what the company has claimed.

When asked about similar previous media characterizations of its counter-terrorism efforts, a company spokesperson clarified that such statements are incorrect and that the 99% figure refers exclusively to the percent of terrorist content deleted by the company that had been flagged by AI.
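The confusion is between two different ratios. The sketch below works through the arithmetic: 99.5% of the 1.9 million actioned items is about 1,890,500 flagged by Facebook's tools before any user report, leaving roughly 9,500 that were not. The media described something closer to recall, the share of all terrorist content on the platform that was caught, which needs a total Facebook has not disclosed. The totals in the loop are therefore purely hypothetical, and the function names are ours.

```python
def proactive_rate(flagged_by_tools: int, total_actioned: int) -> float:
    """Share of *actioned* items that were flagged before any user report."""
    return flagged_by_tools / total_actioned


def recall(total_actioned: int, total_on_platform: int) -> float:
    """Share of *all* terrorist items on the platform that were actioned.

    Requires total_on_platform, which has not been disclosed.
    """
    return total_actioned / total_on_platform


actioned = 1_900_000
flagged = actioned * 995 // 1000  # 99.5%, computed in integers to avoid float rounding

print(flagged)  # 1890500
print(proactive_rate(flagged, actioned))

# The same 99.5% is compatible with very different recall, depending on an unknown total.
for hypothetical_total in (2_000_000, 5_000_000, 20_000_000):
    print(hypothetical_total, round(recall(actioned, hypothetical_total), 3))
```

Nothing in the proactive rate constrains recall: it stays at 99.5% whether Facebook removed most of the terrorist content on its platform or a small fraction of it.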

Of course, there is little Facebook can do to correct the mainstream media’s misunderstanding and mischaracterization of its efforts, but at the least perhaps the company could in future request that outlets correct such statements to ensure the public understands the actual strengths and limits of Facebook’s efforts.

It is also important to recognize that the overwhelming majority of this deleted content is not the result of powerful new advances in AI that are able to autonomously recognize novel terrorism content. Instead, these deletions largely result from simple exact duplicate matching: once a human moderator deletes a piece of content, it is added to a site-wide blacklist that prevents other users from reuploading it. A company spokesperson noted that photo and video removal is currently performed through exact duplicate matching only, while textual posts are also removed through a machine learning approach.
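A minimal sketch of exact duplicate matching, assuming a SHA-256 digest as the fingerprint. Facebook has not said which fingerprinting it uses, and many production systems add perceptual hashes that tolerate small edits; this sketch deliberately does not, to show what "exact" means. The class and method names are hypothetical.

```python
import hashlib


class ExactMatchBlocklist:
    """Blocks re-uploads of content a human moderator has already removed.

    Uses a SHA-256 digest as the fingerprint, so only byte-identical files match.
    """

    def __init__(self) -> None:
        self._fingerprints: set[str] = set()

    @staticmethod
    def fingerprint(content: bytes) -> str:
        return hashlib.sha256(content).hexdigest()

    def moderator_removes(self, content: bytes) -> None:
        """Record a human removal so future identical uploads are blocked."""
        self._fingerprints.add(self.fingerprint(content))

    def allows_upload(self, content: bytes) -> bool:
        return self.fingerprint(content) not in self._fingerprints


blocklist = ExactMatchBlocklist()
video = b"placeholder bytes standing in for a removed video"
blocklist.moderator_removes(video)

print(blocklist.allows_upload(video))            # False: exact copy is blocked
print(blocklist.allows_upload(video + b"\x00"))  # True: one extra byte evades it
```

Every match depends on a human having removed that exact file first, and any re-encoding, crop or appended byte produces a new fingerprint: the whack-a-mole problem in miniature.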

It is unclear why the company took so long to adopt such exact duplicate removal technology. When asked previously, before it launched the tools, why it was not using such widely deployed fingerprinting technology to remove terrorist content, the company did not offer a response. Only under significant governmental pressure did it finally agree to adopt fingerprint-based removal, again reinforcing the role policymakers can play in forcing Silicon Valley to act.

Thus, while Facebook tells us that the majority of terroristic content it deletes is now based on algorithms that prevent previously deleted content from being reuploaded and machine flagging of textual posts, we simply have no idea whether Facebook’s algorithms are catching even the slightest fraction of terroristic material on its site. More importantly, we have no idea how much of the “terrorism” content it deletes actually has nothing to do with terrorism and is the result of human or machine error. The company simply refuses to tell us.

It is clear, however, that even the most basic human inspection of the site shows its approach is limited at best. Bloomberg was able to rapidly locate various terroristic content just from simple keyword searches in a handful of languages. One would think that if Facebook were serious about curbing terroristic use of its platform, it would have made terrorism material harder to find than just typing a major terrorist group’s name into the search bar.

When asked about this, a company spokesperson emphasized that terrorism content is against its rules and that it is investing in doing better, but declined to comment further.

When asked why Facebook doesn’t just type the name of each major terrorist group into its search bar in a few major languages and delete the terrorist content that comes back, the company again said it had no comment beyond emphasizing that such material is against its rules.
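The basic approach described here amounts to a keyword search that routes hits to human reviewers. A minimal sketch, assuming posts are available as a mapping from post ID to text; the posts and the helper names are hypothetical, and a real name list would need many languages and spellings beyond the two English names from this article.

```python
import unicodedata


def normalise(text: str) -> str:
    """Apply Unicode NFKC and case-fold so simple variants compare equal."""
    return unicodedata.normalize("NFKC", text).casefold()


def keyword_hits(posts: dict[str, str], group_names: list[str]) -> dict[str, list[str]]:
    """Map each post ID to the group names it mentions, for human review."""
    needles = [normalise(name) for name in group_names]
    hits: dict[str, list[str]] = {}
    for post_id, text in posts.items():
        haystack = normalise(text)
        found = [name for name, needle in zip(group_names, needles) if needle in haystack]
        if found:
            hits[post_id] = found
    return hits


# Hypothetical posts; a real review queue would hold millions, in many languages.
posts = {
    "p1": "Join ISIS today",
    "p2": "News analysis: how al-Qaeda recruits online",
    "p3": "Photos from my holiday",
}
print(keyword_hits(posts, ["ISIS", "al-Qaeda"]))  # {'p1': ['ISIS'], 'p2': ['al-Qaeda']}
```

Note that `p2` is journalism, not propaganda, and plain substring matching is crude (for example, "crisis" contains "isis"). That is why such hits belong in a human review queue rather than an automatic deletion pipeline.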

Therein lies perhaps the greatest pitfall of Facebook’s AI-centric approach: overreliance on and trust in machines without humans verifying the results, and a focus on dazzling, technologically pioneering approaches over the most basic, simple approaches that get you much of the way there.

All the pioneering AI advances in the world are of limited use if users can just type a terrorist group’s name into the search bar to view all their propaganda and connect with them to join up or offer assistance.

Yet in its quest to find an AI solution to every problem, Facebook all too often misses the simpler solution, while the resulting massively complex and brittle AI systems are of unknown real-world accuracy.

It makes one wonder why no one at Facebook ever thought to just search for terrorist groups by name in their search bar. Does this represent the same kind of stunning failure of imagination the company claims was behind its lack of response to Russian election interference? Or is it simply a byproduct of AI-induced blindness in which the belief that AI will solve all society’s ills blinds the company to the myriad issues its AI is not fixing?

The company has to date declined to break out the numbers of what percent of the terrorism content it deletes is simple exact duplicate matching versus actual AI-powered machine learning tools flagging novel material. We therefore have no idea whether its massive investments in AI and machine learning have actually had any kind of measurable impact on content deletion at all, especially given a spokesperson’s emphasis that video and image content (which constitutes a substantial portion of ISIS-generated content) is deleted only through exact matching of material previously identified by a human, not AI flagging of novel content.

Thus, in place of the media narrative of pioneering deep learning advances powering bleeding-edge AI algorithms that delete 99% of terrorism content, we have the far more mundane reality of exact duplicate matching preventing 99% of reuploads, with perhaps some machine learning text matching thrown in for good measure. We have no idea what percent of actual terrorism content this represents or how much non-terrorism content is mistakenly deleted. The fact that the company has repeatedly declined to comment on its false positive rate while touting other statistics does little to suggest that it believes its algorithms are accurate enough to discuss openly.
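None of these accuracy numbers are published, but it helps to be precise about what they would be. The sketch below uses an invented audit sample, in which humans re-check removed and kept items, to compute the three ratios that matter: precision (how much of what was deleted really was terrorism content), recall (what share of terrorism content was caught) and the false positive rate (how much legitimate content was wrongly deleted). The class, field names and counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModerationAudit:
    """Counts from a hypothetical audit in which humans re-check a random sample."""

    removed_and_terrorist: int  # true positives
    removed_not_terrorist: int  # false positives
    kept_but_terrorist: int     # false negatives
    kept_not_terrorist: int     # true negatives

    def precision(self) -> float:
        """Of what was removed, how much really was terrorist content?"""
        removed = self.removed_and_terrorist + self.removed_not_terrorist
        return self.removed_and_terrorist / removed

    def recall(self) -> float:
        """Of the terrorist content present, how much was removed?"""
        terrorist = self.removed_and_terrorist + self.kept_but_terrorist
        return self.removed_and_terrorist / terrorist

    def false_positive_rate(self) -> float:
        """Of the legitimate content, how much was wrongly removed?"""
        legitimate = self.removed_not_terrorist + self.kept_not_terrorist
        return self.removed_not_terrorist / legitimate


audit = ModerationAudit(
    removed_and_terrorist=90,
    removed_not_terrorist=10,
    kept_but_terrorist=60,
    kept_not_terrorist=9_840,
)
print(round(audit.precision(), 3))            # 0.9
print(round(audit.recall(), 3))               # 0.6
print(round(audit.false_positive_rate(), 4))  # 0.001
```

A false positive rate that looks tiny can still mean many wrongly deleted posts when applied to billions of items, which is why the absolute counts matter as much as the rates.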

In similar fashion, Twitter has repeatedly declined to provide detail on its own similar content moderation initiatives.

Most frightening of all is that we, the two billion members of Facebook’s walled garden or Twitter’s 336 million monthly active members, have no understanding and no right to understand how our conversations are being steered or shaped. As the companies increasingly transition from passive neutral communications channels into actively moderated and controlled mediums, they are silently controlling what we can say and what we are aware of without us ever knowing.

It is remarkable that the very companies that once championed free speech are now censoring and controlling speech with the same silent heavy-handed censorship and airbrushing that have defined the world’s most brutal dictatorships. Democracy cannot exist in a world in which a handful of elites increasingly control our information, especially as Facebook becomes an ever more central way in which we consume news.

Putting this all together, as Silicon Valley increasingly turns to AI to solve all the world’s problems, especially complex topics like hate speech and terrorism, companies must ensure that their AI-first approach doesn’t blind them to whether those AI approaches are actually addressing the most common ways users might discover or engage with terroristic content, and whether their AI tools are actually working at all. Companies should also engage more closely with the media outlets that present their technologies to the public to ensure those tools are portrayed accurately, and they could help by offering greater detail, including false positive rates. Instead of viewing the media as adversaries to be starved of information, companies could embrace them as powerful vehicles to help convey their stories and the very real complexities of moderating the digital lives of a global world. In the end, however, it is important to remember that we are rapidly embarking down a path of consolidation in which a few elites armed with AI algorithms increasingly wield absolute power over our digital lives, from what we say to what we can see, turning the democratic vision of the early web into the dystopian nightmare of the most frightening science fiction.

Kalev Leetaru


Contributor


The problem here is the lack of diversity of people and opinions in the databases used to train AI, which are created by humans.

"We had this problem with our database for wrinkle estimation, for example," said Konstantin Kiselev, chief technology officer of Youth Laboratories, in an interview. "Our database had a lot more white people than, say, Indian people. Because of that, it's possible that our algorithm was biased."
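One way to surface the kind of bias Kiselev describes is to report accuracy separately for each group instead of as a single overall number. A minimal sketch with invented evaluation results; the group labels "A" and "B" are placeholders, not categories from Youth Laboratories' database, and `accuracy_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, tuple[int, float]]:
    """Return (sample count, accuracy) per group from (group, prediction_correct) pairs."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {g: (totals[g], correct[g] / totals[g]) for g in totals}


# Invented results: group A is well represented in the data, group B is not.
records = [("A", True)] * 90 + [("A", False)] * 10 + [("B", True)] * 6 + [("B", False)] * 4
for group, (n, acc) in sorted(accuracy_by_group(records).items()):
    print(f"group {group}: n={n}, accuracy={acc:.0%}")
```

Pooled, these records give about 87% accuracy (96 of 110), a figure that hides the 60% for the underrepresented group.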

It turns out that color does matter in machine vision.

Since machines can collect, track and analyze so much about you, it's very possible for those machines to use that information against you. It's not hard to imagine an insurance company telling you you're not insurable based on the number of times you were caught on camera talking on your phone. An employer might withhold a job offer based on your "social credit score."

Any powerful technology can be misused. Today, artificial intelligence is used for many good causes including to help us make better medical diagnoses, find new ways to cure cancer and make our cars safer. Unfortunately, as our AI capabilities expand we will also see it being used for dangerous or malicious purposes. Since AI technology is advancing so rapidly, it is vital for us to start to debate the best ways for AI to develop positively while minimising its destructive potential.

AI recognition systems have potential, but they are not yet ready to be used in our current society.

Right now, we often do not know how a recognition system arrived at a particular decision. We should therefore not rely on systems that label people as high-risk based on stereotypes about their appearance.

We developed our own recognition system, trained on our own database, that recognizes potential danger. It lets people look behind the scenes and see, in a simple way, how a recognition system works and what it can do.

We show how smart systems arrive at certain decisions.

This project shows both the far-reaching power of recognition systems and what is wrong with them.

We collected:

213 images of ammunition

202 images of terrorists

185 images of rifles

205 images of pistols

195 images of tanks
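Counting is the first check on any training set. A short sketch that totals the classes listed above and reports how balanced they are. The counts come from the list; the code itself is our illustration, not part of the project's system. Balanced class counts do not, on their own, rule out bias within a class, such as who appears in the "terrorists" images.

```python
# Image counts per class, as listed above.
class_counts = {
    "ammunition": 213,
    "terrorists": 202,
    "rifles": 185,
    "pistols": 205,
    "tanks": 195,
}

total = sum(class_counts.values())
print(f"total images: {total}")  # total images: 1000
for label, count in sorted(class_counts.items(), key=lambda item: -item[1]):
    print(f"{label:<11} {count:>4}  {count / total:.1%}")

# Ratio of largest to smallest class; 1.0 would be perfectly balanced.
imbalance = max(class_counts.values()) / min(class_counts.values())
print(f"imbalance ratio: {imbalance:.2f}")  # imbalance ratio: 1.15
```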